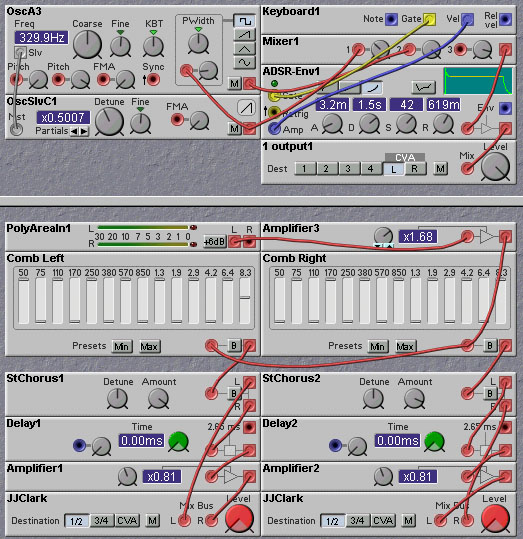

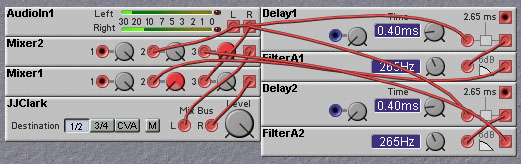

Figure 14.1. A patch illustrating the use of IID and ITD cues in spatial localization of a sound (A. Browl).

The two most important localization cues are the interaural time difference, or ITD, and the interaural intensity difference, or IID. The IID is what most synthesizer users are familiar with, and arises from the fact that, due to the shadowing of the sound wave by the head, a sound coming from a source located to one side of the head will have a higher intensity, or be louder, at the ear nearest the sound source. One can therefore create the illusion of a sound source emanating from one side of the head merely by adjusting the relative levels of the signals that are fed to two separated speakers or headphones. This is the basis of the commonly used pan control, such as the Nord Modular pan module.

The interaural time difference is just as important, if not more so, in permitting the brain to perceive spatial location. This time difference arises from the difference in distance between a sound source and the two ears. Since sound travels at a constant velocity (under usual listening conditions), this distance difference translates into a time difference. A sound wave travelling from a sound source located on your left side will reach your left ear before it reaches your right ear. As the sound source moves towards the front of your head, the interaural time difference will drop and become zero when the sound source is centered between your ears. The maximum interaural time difference depends on the width of your head and on the speed of sound in your listening environment. If we take the speed of sound to be 330 meters/second, and the distance between your ears to be 15 cm, then the maximum interaural time difference will be 0.15 m divided by 330 m/s, or about 0.45 msec. Fortunately, the Nord Modular Delay module can easily provide this range of time delays, so that it is straightforward to implement the ITD spatialization cues with the Nord Modular.
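The arithmetic above can be checked with a short Python sketch. The maximum ITD follows directly from the figures in the text. The dependence on source angle is not given in the text, so the `itd_seconds` function below assumes a simple sine law (zero straight ahead, maximum at 90 degrees to one side), which ignores the extra path length around the head. All function and constant names are illustrative.

```python
import math

SPEED_OF_SOUND_M_S = 330.0  # value used in the text
EAR_SPACING_M = 0.15        # value used in the text


def max_itd_seconds(ear_spacing_m=EAR_SPACING_M, speed_m_s=SPEED_OF_SOUND_M_S):
    """Largest possible ITD: the time sound needs to travel from ear to ear."""
    return ear_spacing_m / speed_m_s


def itd_seconds(azimuth_deg, ear_spacing_m=EAR_SPACING_M,
                speed_m_s=SPEED_OF_SOUND_M_S):
    """ITD for a source at the given azimuth (0 = straight ahead).

    ASSUMPTION: a sine-law model, not taken from the text. It only
    guarantees the two end points the text describes: zero ITD in
    front and the maximum ITD with the source fully to one side.
    """
    return max_itd_seconds(ear_spacing_m, speed_m_s) * math.sin(
        math.radians(azimuth_deg))


def itd_in_samples(azimuth_deg, sample_rate=48000):
    """The same ITD expressed as a (fractional) number of samples."""
    return itd_seconds(azimuth_deg) * sample_rate


if __name__ == "__main__":
    print("max ITD in msec:", max_itd_seconds() * 1000.0)
    print("ITD at 30 degrees in msec:", itd_seconds(30.0) * 1000.0)
```

The delay times involved are well under a millisecond, which is why the delay setting has to be adjusted in small steps to hear the source move.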

The following patch, courtesy of Anig Browl, illustrates basic sound source localization using both IID and ITD cues. The important elements of this patch are the Pan module (which provides the IID cue) and the Delay modules (which provide the ITD cue). The patch also includes a filter which models the reduction in high frequency content of a signal when it is located behind the head.
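For readers who want to experiment outside the Nord Modular, the combination of a pan (IID) and a delay on the far ear (ITD) can be sketched in Python. This is not a transcription of the patch: the equal-power pan law, the sine mapping from azimuth to pan position, and the default `max_itd_s` value are assumptions made for illustration, and the behind-the-head filter is left out.

```python
import math


def spatialize(mono, azimuth_deg, sample_rate=48000, max_itd_s=0.00045):
    """Place a mono signal using an IID cue (pan) and an ITD cue (delay).

    azimuth_deg runs from -90 (hard left) through 0 (center) to +90
    (hard right). Returns (left, right) as lists of floats.
    """
    pos = math.sin(math.radians(azimuth_deg))  # -1 (left) .. +1 (right)

    # IID cue: equal-power pan (ASSUMED pan law, not the Nord's).
    angle = (pos + 1.0) * math.pi / 4.0        # 0 .. pi/2
    gain_l = math.cos(angle)
    gain_r = math.sin(angle)

    # ITD cue: delay the ear that is farther from the source.
    delay = int(round(abs(pos) * max_itd_s * sample_rate))
    delay_l = delay if pos > 0 else 0          # source on the right
    delay_r = delay if pos < 0 else 0          # source on the left

    n = len(mono) + delay
    left = [0.0] * n
    right = [0.0] * n
    for i, x in enumerate(mono):
        left[i + delay_l] = gain_l * x
        right[i + delay_r] = gain_r * x
    return left, right


if __name__ == "__main__":
    click = [1.0] + [0.0] * 31
    left, right = spatialize(click, -45.0)
    print("left peak at sample", left.index(max(left)))
    print("right peak at sample", right.index(max(right)))
```

With a source to the left, the click arrives first and louder in the left channel, and later and quieter in the right channel, which is the pair of cues the text describes.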

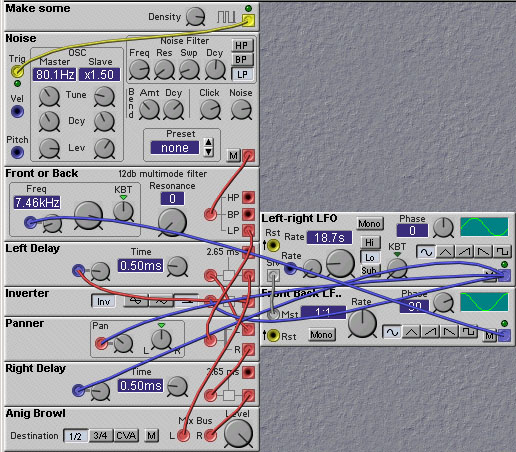

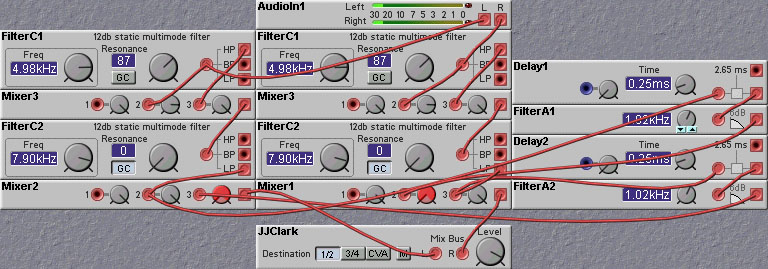

The spatialization model used in the above patch is very simple. In practice the IID and ITD values depend on the frequency of the sound source. For example, the ITD is largest at low frequencies; it begins to roll off at around 1 kHz and falls to about half its low-frequency value at around 5 kHz. IID is more important at high frequencies. This is because the low frequencies are not attenuated very much by the head, while the high frequencies begin to get attenuated by the head shadow around 2 kHz. This head attenuation actually begins to be reduced around 5 kHz, due to conduction of sound along the skin surface. Thus, there is a peak in the response in the far ear centered around 5 kHz, after which the response falls away once again. Based on these details, we can make a more realistic IID- and ITD-based spatialization patch, as shown in the following patch.

The ear itself is shaped so as to aid in the localization of sound sources. The outer ear structure, which is referred to as the pinna, filters the sound in ways that depend on the direction from which the sound impinges on the ear. All of the folds, which give each ear its own distinctive shape, reflect and absorb sound waves. The reflected waves cancel or reinforce each other in a wavelength-dependent manner, creating the filtering effect. The brain can use the pinna-induced spectral changes to extract additional localization information. Note that, unlike the IID and ITD cues, the pinna-related cues can be used to provide information about the vertical localization of sound sources. That is, one can get information about whether sounds are coming from sources above or below the head.

When people move their heads they get slight changes in the various cues that permit localization. Many animals (and some people!) can even move their ears, which can produce changes in the way in which the pinnae interact with the incoming sound. These slight changes themselves provide extra information that the brain can use to improve localization. These subtle, but important, motion effects are usually not reproduced in synthesizer listening situations, because head motion is not sensed. This does not mean that such a thing is not possible. There are many devices on the market that can be used to sense head position. You can even try building your own! These devices could be modified or enhanced so as to render their outputs in the form of MIDI controller messages, which can then be used to adjust the spatialization parameters (IID, ITD) in a Nord Modular patch.

In addition to the filtering action of the pinna structures, the ear canal itself acts as a resonant filter, with a peak at around 5 kHz. The frequency and position dependent characteristics of the pinnae and ear canal are usually summarized in the form of what is called the Head-Related Transfer Function, or HRTF for short. These transfer functions describe the frequency response of each ear for sound sources at various locations. The transfer functions are obtained by doing acoustical measurements with microphones embedded in the ear canals of individual listeners, or microphones implanted in models cast from the ears of listeners. Clearly, doing these measurements for every possible listener of your music is impractical, so designers of spatialization systems have relied on using generic models obtained from a small set of test listeners. The results obtained with these generic models are not as convincingly realistic as with individually tailored models, but are still an improvement over the more basic approaches that do not model the influence of the pinna at all.

From the point of view of implementing HRTF models with the Nord Modular, the news is not good. The HRTFs, even the generic ones, are quite complicated, with many little peaks and valleys in the response curve. It is these little variations that give the accurate spatialization, so approximating the HRTF by simpler filters does not yield realistic results. Another difficulty is that HRTF data is hard to come by: it is difficult to make your own measurements (but feel free to try!) and companies that have their own generic models tend to guard them jealously and don't give out the details. We will discuss some alternatives, however, in the next section.

Virtual Speaker Simulation in Headphones

As most readers will know, listening to music through loudspeakers is a much different experience than listening to it through headphones. Part of the reason for this is that people usually don't play their loudspeakers loud enough to hear all the details which they pick up on headphones. More importantly, however, it is difficult to provide convincing spatialization with headphones using the simplistic IID spatialization techniques found on many recordings. Consider a sound panned hard left. In loudspeakers, the sound is perceived as coming from the left, and outside the body. In headphones, the same sound would be perceived as being located right at the left ear! Thus, a lot of research has been done in developing virtual speakers where headphones give the same aural impression as speakers located in some position (usually 30 degrees off center).

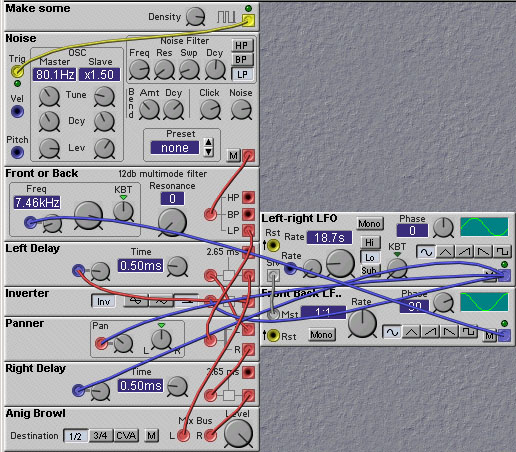

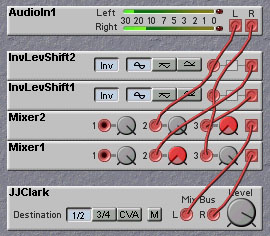

The first system that was developed to improve headphone spatialization was the crossfeed network developed by Benjamin Bauer in the 1960s. This system simulated the crosstalk that is present in normal hearing by mixing in a time-delayed (0.4 msec) and lowpass filtered (to 5 kHz) version of one audio channel to the opposite channel. Since this system did not accurately model the frequency dependent pinna effects, it did not provide a completely natural sound localization, but did produce a sound that was spatialized to some extent and solved the problem of hard panned sounds being perceived as "inside" the headphone. A Nord Modular patch that implements this crossfeed system is shown below:
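Apart from the Nord Modular patch, the crossfeed signal flow described above can also be sketched in Python: each channel is delayed by 0.4 msec, lowpass filtered at 5 kHz, and mixed into the opposite channel. The delay and cutoff come from the text. The one-pole lowpass and the `amount` mix level are assumptions, since the text does not specify the filter slope or how much crossfeed is used.

```python
import math


def one_pole_lowpass(signal, cutoff_hz, sample_rate):
    """Simple 6 dB/octave lowpass (ASSUMED filter type)."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in signal:
        y = (1.0 - a) * x + a * y
        out.append(y)
    return out


def delay_samples(signal, n):
    """Delay a signal by n samples, keeping the original length."""
    return ([0.0] * n + list(signal))[:len(signal)]


def crossfeed(left, right, sample_rate=48000, delay_s=0.0004,
              cutoff_hz=5000.0, amount=0.5):
    """Bauer-style crossfeed for two equal-length channels.

    amount is a hypothetical mix level for the crossfed signal.
    """
    n = int(round(delay_s * sample_rate))
    from_left = one_pole_lowpass(delay_samples(left, n), cutoff_hz, sample_rate)
    from_right = one_pole_lowpass(delay_samples(right, n), cutoff_hz, sample_rate)
    out_l = [l + amount * fr for l, fr in zip(left, from_right)]
    out_r = [r + amount * fl for r, fl in zip(right, from_left)]
    return out_l, out_r


if __name__ == "__main__":
    # A click panned hard left now also appears, later and duller,
    # in the right channel.
    left = [1.0] + [0.0] * 63
    right = [0.0] * 64
    out_l, out_r = crossfeed(left, right)
    print("first non-zero right sample:",
          next(i for i, v in enumerate(out_r) if v != 0.0))
```

Because the hard-panned click leaks into the far channel with a delay and reduced treble, it no longer sounds as if it sits inside one earpiece.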

To improve upon the Bauer crossfeed system, we must take account of the HRTF of the ear structures. One of the advantages of merely trying to recreate the sound of a single speaker in a given spatial location, rather than the more general problem of placing a sound in an arbitrary location in space, is that only one HRTF need be used. This can be extended to the problem of surround sound by recreating the sound of four or five speakers. This would require four or five fixed HRTFs, which is still easier than computing suitable HRTFs on the fly for localizing sound sources at arbitrary positions. A simple approximation to a generic HRTF was implemented, in 1996, by Douglas Brungart (US Patent 5,751,817). In his system the HRTF was modeled on the HRTF measured in a dummy head 7 feet from a sound source 30 degrees off center. Brungart approximated the rather complex measured HRTF with a simple filter that had a resonant peak (with Q of 5) at 5 kHz. This filter reasonably models the ear canal resonance but does not do a good job with the pinna effects. A patch which implements this simple HRTF filter is shown below:
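Independently of the patch, a filter with a single resonant peak at 5 kHz and a Q of 5 can be sketched in Python as a standard peaking biquad (the widely used "Audio EQ Cookbook" form). This is only one possible realization and is not taken from the patent or the patch. In particular, the height of the peak (`gain_db`) is a made-up placeholder, since the text gives only the center frequency and Q.

```python
import cmath
import math


def peaking_coeffs(center_hz=5000.0, q=5.0, gain_db=10.0, sample_rate=48000):
    """Peaking-EQ biquad: unity gain far from center_hz, a bump at it.

    gain_db is a HYPOTHETICAL value; the text does not state it.
    Returns (b, a) with a[0] normalized to 1.
    """
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha / amp
    b = ((1.0 + alpha * amp) / a0,
         -2.0 * math.cos(w0) / a0,
         (1.0 - alpha * amp) / a0)
    a = (1.0,
         -2.0 * math.cos(w0) / a0,
         (1.0 - alpha / amp) / a0)
    return b, a


def magnitude(b, a, freq_hz, sample_rate=48000):
    """Linear gain of the biquad at freq_hz."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * freq_hz / sample_rate)
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return abs(num / den)


def apply_biquad(signal, b, a):
    """Run the filter over a list of samples (direct form I)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


if __name__ == "__main__":
    b, a = peaking_coeffs()
    for f in (500.0, 2500.0, 5000.0, 10000.0):
        print(f, "Hz gain in dB:", 20.0 * math.log10(magnitude(b, a, f)))
```

As the text notes, a single peak of this kind captures the ear canal resonance but none of the finer pinna detail.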

Spatial Enhancers and Ambience Recovery

As previously noted, actual HRTFs are very complex and vary from person to person. So they are computationally expensive to implement and usually impractical to acquire. Alternative methods have therefore been sought. One of these alternatives is to perform ambience recovery. In ambience recovery the stereo sound field is widened by emphasizing the directional information contained in each channel. This directional information is primarily contained within the channel difference (L-R) signal. Because of this, we can increase the spaciousness of the sound simply by amplifying the L-R signal. The following patch illustrates implementation of this simple ambience recovery technique on the Nord Modular. It is similar to the Koss "Phase" brand of headphones, based on a design by Jacob Turner (US Patent 3924072) in 1974. The amount of crossfeed is set by Knob 1. An extreme clockwise setting removes most of the sound, leaving only the ambient information. An intermediate setting is best.
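One way to read the technique is as negative crossfeed: a scaled copy of each channel is subtracted from the other. The Python sketch below follows that reading, which is an assumption and not a transcription of the patch or the patent. The `amount` parameter stands in for Knob 1. At `amount = 1.0` only the difference signal remains, matching the "extreme clockwise" behaviour described above.

```python
def ambience_recovery(left, right, amount):
    """Widen a stereo signal by subtracting crossfeed.

    amount: 0.0 leaves the signal unchanged, 1.0 leaves only the
    channel difference (L-R on the left, R-L on the right).
    In mid/side terms this scales the common (mid) part by
    (1 - amount) and the difference (side) part by (1 + amount).
    """
    out_l = [l - amount * r for l, r in zip(left, right)]
    out_r = [r - amount * l for l, r in zip(left, right)]
    return out_l, out_r


if __name__ == "__main__":
    # One centered sample (equal in both channels) followed by one
    # hard-left sample.
    left = [1.0, 1.0]
    right = [1.0, 0.0]
    for amount in (0.0, 0.5, 1.0):
        print(amount, ambience_recovery(left, right, amount))
```

Centered material, which is identical in both channels, shrinks as the amount rises, while material that differs between the channels is preserved or emphasized. This is why an intermediate setting tends to work best.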

Reverberation and Spatialization

An important cue for spatialization is the presence of reflected sounds. In an enclosed environment the sound that reaches your ears from a sound source comes not only via the direct path from the source to your ear, but also via indirect pathways corresponding to reflections of the source sound waves off of objects in the environment. Since the precise timing and frequency response characteristics of these secondary (and tertiary...) sounds depend on the location of the sound source relative to the reflecting objects, and on the location of these objects relative to the listener, the reflections give additional cues as to localization. Modeling the reverberation effects is not just a simple matter of passing the source signal through a reverb unit and adding the result to the direct signal. The reflections will come from different directions and will thus have different time delays, amplitudes, and frequency dependent variations. These position dependent effects are not modeled with standard reverb units. In any event, modeling the reflected components is beyond the current capabilities of the Nord Modular, as it lacks delay modules with the required delay times.

Spectral Diffusion

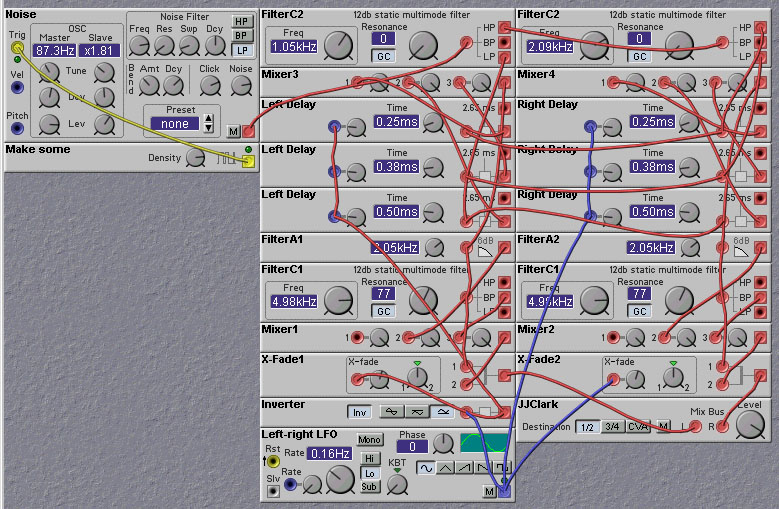

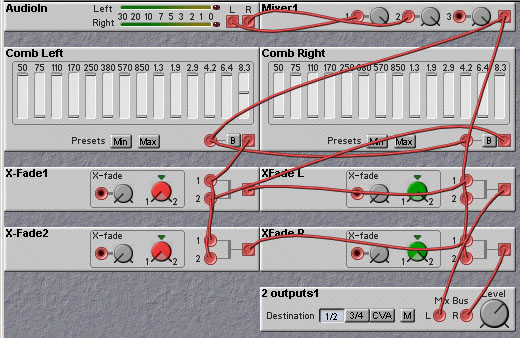

A popular sound processing technique is the "chorus" effect, whereby a single sound, usually rather plain and anemic, is phase- or pitch-shifted by a small time-varying amount and added to its original form. This gives the effect of having multiple independent sources of that sound being added together. The result is a much fuller and richer sound. We can obtain a similar effect with respect to spatialization. A mono source can be spread over the perceived space by causing various components of the sound to be positioned at different points in space. For example, one can apply a bank of narrow bandpass filters to the sound and place the outputs of each filter at different spatial locations (through simple panning, or through more complex localization schemes). This is demonstrated in the following Nord Modular patch, designed by Keith Crosley, in which a set of bandpass filter modules is used to decompose the source signal, and the filter outputs are either panned hard left or hard right.
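The filter-bank idea can be tried out in Python with a minimal sketch like the one below. It is not a reconstruction of the Crosley patch: the band center frequencies, the Q value, the biquad bandpass design, and the rule of sending alternate bands to alternate sides are all assumptions chosen for illustration.

```python
import math


def bandpass_coeffs(center_hz, q, sample_rate):
    """Biquad bandpass with unity gain at center_hz (cookbook form)."""
    w0 = 2.0 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    return (alpha / a0, 0.0, -alpha / a0,
            -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)


def biquad(signal, coeffs):
    """Filter a list of samples (direct form I)."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def spectral_diffusion(mono, centers_hz, q=4.0, sample_rate=48000):
    """Split mono into bands; even-numbered bands go hard left,
    odd-numbered bands go hard right (ASSUMED assignment)."""
    left = [0.0] * len(mono)
    right = [0.0] * len(mono)
    for i, fc in enumerate(centers_hz):
        band = biquad(mono, bandpass_coeffs(fc, q, sample_rate))
        target = left if i % 2 == 0 else right
        for n, v in enumerate(band):
            target[n] += v
    return left, right


if __name__ == "__main__":
    centers = [250.0, 500.0, 1000.0, 2000.0, 4000.0, 8000.0]  # hypothetical
    sr = 48000
    tone = [math.sin(2.0 * math.pi * 1000.0 * n / sr) for n in range(4800)]
    left, right = spectral_diffusion(tone, centers, sample_rate=sr)
    print("left energy:", sum(v * v for v in left))
    print("right energy:", sum(v * v for v in right))
```

A single sine tone ends up mostly on the side that owns its band, whereas a harmonically rich source has its partials scattered across both sides, which is what produces the widened image.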

If you toggle between the dry mono sound and the spatialized sound you can immediately notice that this spatialization technique results in a much richer and fuller sound, similar in some ways to the chorus effect. Indeed, one can improve upon this approach by adding in time-delay cues to the panning, and applying chorusing to the individual components. This is done in the next patch. The resulting sound is even richer. Note the usage of the poly area. We can make use of this to increase the polyphony since the spatialization can (and should) be shared amongst the different voices.