Texture-Aware SLAM Using Stereo Imagery and Inertial Information

Simultaneous Localization and Mapping (SLAM) is one of the classic problems in robotics. Solving it enables a robot to build a map of its surroundings while simultaneously localizing itself within that map. One of the main reasons SLAM is so ubiquitous is that it makes it convenient to specify plans as waypoints and to navigate in Cartesian coordinates, so that a human operator can simply issue a command such as `goto(x, y, z, orientation)`. While this is not the only way to perform navigation, it has been the main driving force behind progress in this area over the last three decades. The other driving force is the ability to build 3D maps of the environment online and to detect loop closures (i.e., recognize previously visited places).

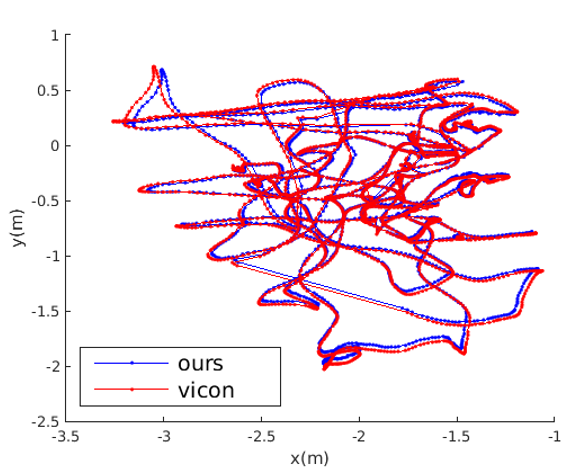

We have built a SLAM system that tightly fuses measurements from a stereo camera and an IMU in real time. This enables accurate depth estimation, robust handling of fast rotations, and knowledge of the gravity vector, using the accelerometer, gyroscope, and magnetometer included in the IMU. Our system extends ORBSLAM1 in three ways: it tightly integrates IMU measurements into the optimization, it incorporates stereo camera measurements differently from ORBSLAM2, and it models the uncertainty (covariance matrix) of the estimated variables. We validated the system in a VICON-equipped lab, as shown above, where it achieved an average translation error of 3 cm, and by mapping an underwater shipwreck, as shown below:
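Of the IMU's sensors, the accelerometer observes the gravity vector most directly. As a minimal sketch (not our system's actual initialization), assuming the IMU is stationary and its accelerometer reads roughly (0, 0, +g) when level, averaging a few samples gives the "up" direction (the negation of gravity) in the sensor frame, from which roll and pitch follow. Yaw is not observable from gravity alone, which is one reason a magnetometer or visual constraints help. The function name `gravity_roll_pitch` is hypothetical.

```python
import math


def gravity_roll_pitch(accel_samples):
    """Estimate the up direction, roll and pitch from static accelerometer samples.

    Assumptions (hypothetical sketch): the IMU is (nearly) stationary, so each
    sample measures only the reaction to gravity in the sensor frame, and a
    level sensor reads about (0, 0, +g). Roll/pitch sign conventions vary
    between IMUs, so check your sensor's axis definitions.
    """
    if not accel_samples:
        raise ValueError("need at least one accelerometer sample")
    n = len(accel_samples)
    # Averaging static samples suppresses accelerometer noise.
    ax = sum(s[0] for s in accel_samples) / n
    ay = sum(s[1] for s in accel_samples) / n
    az = sum(s[2] for s in accel_samples) / n
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("zero specific force: free fall or invalid data")
    # Unit vector opposite to gravity ("up"), expressed in the sensor frame.
    up_dir = (ax / norm, ay / norm, az / norm)
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return up_dir, roll, pitch
```

In a tightly coupled system this static estimate would typically only initialize the gravity direction, which the optimization then refines together with the IMU biases.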

In both of these test scenarios, the scene we reconstruct in 3D and localize against is both texture-rich and close to the cameras. The first property enables reliable keypoint detection in the images, and the second enables accurate triangulation. Not all scenarios are as favorable for SLAM as these two, however. Consider, for example, the following images:
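The link between distance and triangulation quality can be made concrete with the standard rectified-stereo model: depth is Z = f·B/d for focal length f (pixels), baseline B (meters), and disparity d (pixels), so a disparity error σ_d produces a first-order depth error of σ_Z ≈ Z²·σ_d/(f·B). The sketch below assumes this model; the camera parameters in the demo are illustrative, not those of our rig.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth of a rectified stereo match: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_std(depth_m, focal_px, baseline_m, disparity_std_px):
    """First-order depth standard deviation caused by disparity noise.

    |dZ/dd| = f * B / d**2 = Z**2 / (f * B), hence
    sigma_Z = Z**2 * sigma_d / (f * B).
    """
    return depth_m ** 2 * disparity_std_px / (focal_px * baseline_m)


if __name__ == "__main__":
    f, b, sd = 700.0, 0.12, 0.5  # hypothetical focal length, baseline, disparity noise
    for z in (2.0, 20.0):
        d = f * b / z
        print(f"Z={z:5.1f} m  disparity={d:5.1f} px  sigma_Z={depth_std(z, f, b, sd):.3f} m")
```

With these illustrative values, the depth uncertainty is about 2.4 cm at 2 m but about 2.4 m at 20 m: it grows quadratically with distance, which is why distant scenes triangulate poorly.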

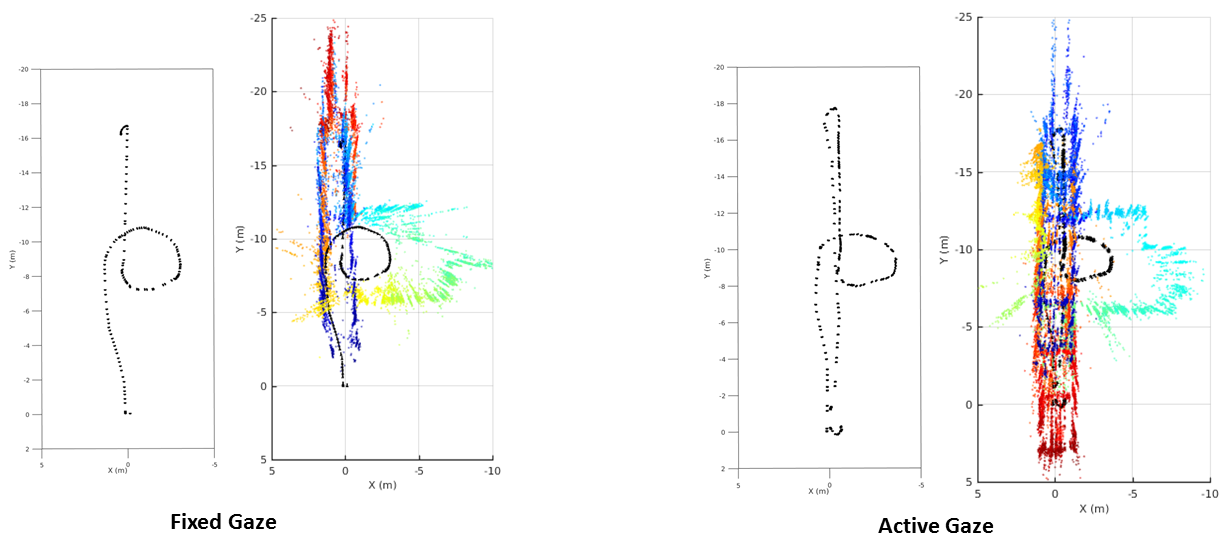

These problematic scenes cannot be fixed by designing a better algorithm: they are inherently poor inputs that can make it impossible for the system to recover its localization. This is why we decided to work on active-gaze SLAM, in which we actively direct the cameras toward parts of the scene that are good for SLAM in terms of texture and triangulation quality. To achieve this, we split the incoming images into patches and predict for each patch a score that favors texture-rich regions with small triangulation uncertainty. We then direct the camera toward the patch with the highest score.

The video below shows the system running in real time outdoors, with the stereo-IMU sensor mounted on a pan-tilt unit on a Husky ground vehicle. The image below it compares active-gaze SLAM with a fixed gaze on a Husky navigating indoors.
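The patch-scoring and gaze-selection loop can be sketched roughly as follows. This is not our learned score predictor: as a hand-crafted stand-in, assuming a grayscale image and a pixel-aligned stereo depth map are available, it scores each square patch by its mean gradient magnitude (a crude texture measure) divided by a penalty that grows with the first-order stereo depth uncertainty, then converts the center of the best patch into pan and tilt angles with a pinhole camera model. The function names, the score formula, and all default parameters (`patch`, `focal_px`, `baseline_m`, `disparity_std_px`, `alpha`) are hypothetical.

```python
import numpy as np


def patch_scores(gray, depth, patch=32, focal_px=700.0, baseline_m=0.12,
                 disparity_std_px=0.5, alpha=1.0):
    """Score non-overlapping square patches; higher means better for SLAM.

    Hypothetical score: mean gradient magnitude (texture) divided by
    (1 + alpha * sigma_Z), where sigma_Z is the stereo depth std of the
    patch's median depth. Patches without valid depth get -inf.
    """
    h, w = gray.shape
    rows, cols = h // patch, w // patch
    gy, gx = np.gradient(gray.astype(np.float64))  # derivatives along rows, cols
    grad_mag = np.hypot(gx, gy)
    scores = np.full((rows, cols), -np.inf)
    for r in range(rows):
        for c in range(cols):
            sl = (slice(r * patch, (r + 1) * patch),
                  slice(c * patch, (c + 1) * patch))
            z = depth[sl]
            valid = np.isfinite(z) & (z > 0)
            if not valid.any():
                continue  # nothing triangulated here: keep -inf
            texture = grad_mag[sl].mean()
            z_med = np.median(z[valid])
            # First-order stereo depth std: sigma_Z = Z^2 * sigma_d / (f * B)
            sigma_z = z_med ** 2 * disparity_std_px / (focal_px * baseline_m)
            scores[r, c] = texture / (1.0 + alpha * sigma_z)
    return scores


def gaze_angles(scores, patch, fx, fy, cx, cy):
    """Pan/tilt (radians) that would center the best patch, or None if no valid patch."""
    if not np.isfinite(scores).any():
        return None
    r, c = np.unravel_index(np.argmax(scores), scores.shape)
    u, v = (c + 0.5) * patch, (r + 0.5) * patch  # patch center in pixels
    pan = np.arctan2(u - cx, fx)   # positive: target is right of the image center
    tilt = np.arctan2(v - cy, fy)  # positive: target is below the image center
    return float(pan), float(tilt)
```

A real pan-tilt controller would also need the extrinsic offset between the camera and the pan-tilt axes, rate limits, and some hysteresis so that the gaze does not oscillate between patches with similar scores; those details are omitted here.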