Talk Abstracts

I Feel the Earth Move (Under My Feet): Haptic Interaction for Telepresence and Information Delivery

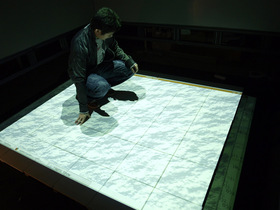

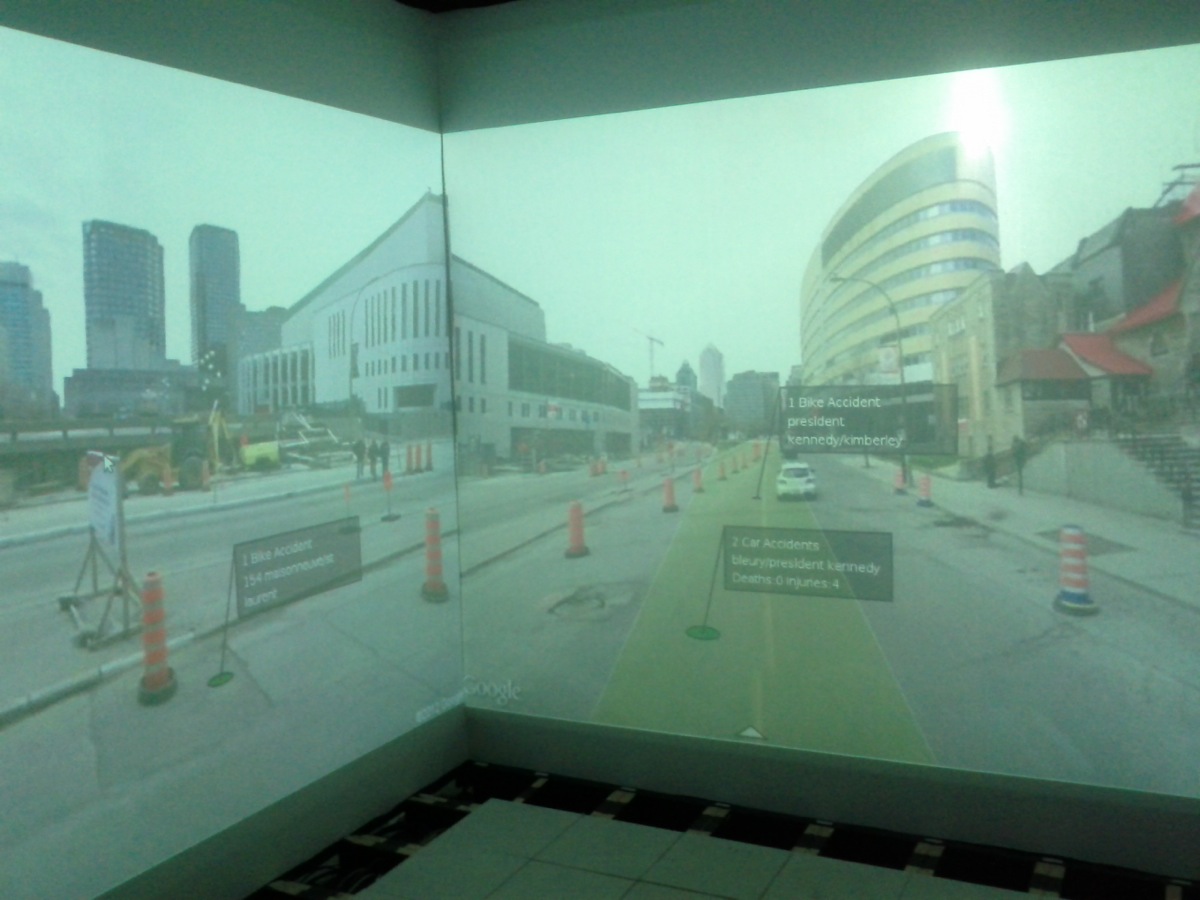

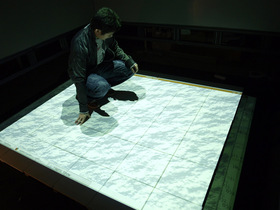

While telepresence technologies have largely focused on video and audio, our lab's research emphasizes the added value that haptics, our sense of touch, brings to the experience. This is true not only for hand contact but equally for the wealth of information we gain about the world through our feet as we walk about our everyday environments. We have focused extensively on understanding properties of haptic perception, and we use this knowledge to develop technologies that improve our ability to render information haptically, particularly for tasks in which visual attention is occupied. This talk illustrates several examples, including immersive telepresence exploration of a city, simulation of the multimodal experience of walking on ice and snow, and our efforts to lower the noise level of the hospital OR and ICU by replacing audio alarms with haptic rendering of patients' vital signs.

Enhanced Human: Wearable computing that transforms how we perceive and interact with our world

The growth of wearable computing brings with it opportunities to leverage body-worn sensors and actuators, endowing us with capabilities that extend us as human beings. In the scope of my lab's work with video, audio, and haptic modalities, we have explored a number of applications that help to compensate for or overcome human sensory limitations. Examples include improved situational awareness for both emergency responders and the visually impaired, treatment of amblyopia, balance and directional guidance, and visceral awareness of remote activity. These applications impose minimal input requirements because the mobile user rarely needs to interact with them. However, more general-purpose mobile computing, involving richer manipulation of digital content, requires alternative display technologies and motivates new ways of interacting with this information that break free from the limited real estate of small-screen displays.

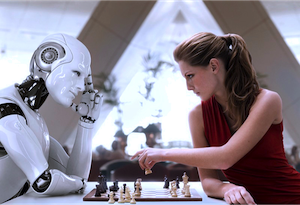

Is Humanity Smart Enough for AI?

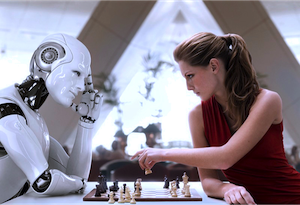

I summarize several concerns, as expressed by noted technology visionaries such as Bill Joy, Elon Musk, and Eliezer Yudkowsky, concerning the dangers, both short- and long-term, posed to humanity by artificial intelligence. I argue that Mark Weiser's warnings regarding the technologist's responsibilities when inventing socially dangerous technology are particularly relevant in the domain of AI, given the combination of risks that this technology poses. Specifically, I cite our past failures in building complex systems that reliably work as intended, our increasing dependence on such complex (AI) systems, and the fact that when such systems don't work "as intended", bad things tend to happen. Most troubling, even if they do work as intended, AI systems can be extremely dangerous in the wrong hands, and things look even worse if AI gets out of human hands.

Telepresence doesn't quite cut it: Multimodal Challenges in Virtual and Shared Reality

The Star Trek Holodeck experience was pitched as the ultimate in VR environments, perhaps embodying the dream of true telepresence, in which users receive the full sensory experience of being in another location. However, most so-called "telepresence" systems today offer little more than high-resolution displays, as if all one needs to achieve the illusion is Skype on a big screen. Despite the hype, such systems generally fail to deliver a convincing level of co-presence between users and come nowhere close to providing the sensory fidelity or supporting the expressive cues and manipulation capabilities we take for granted with objects in the physical world.

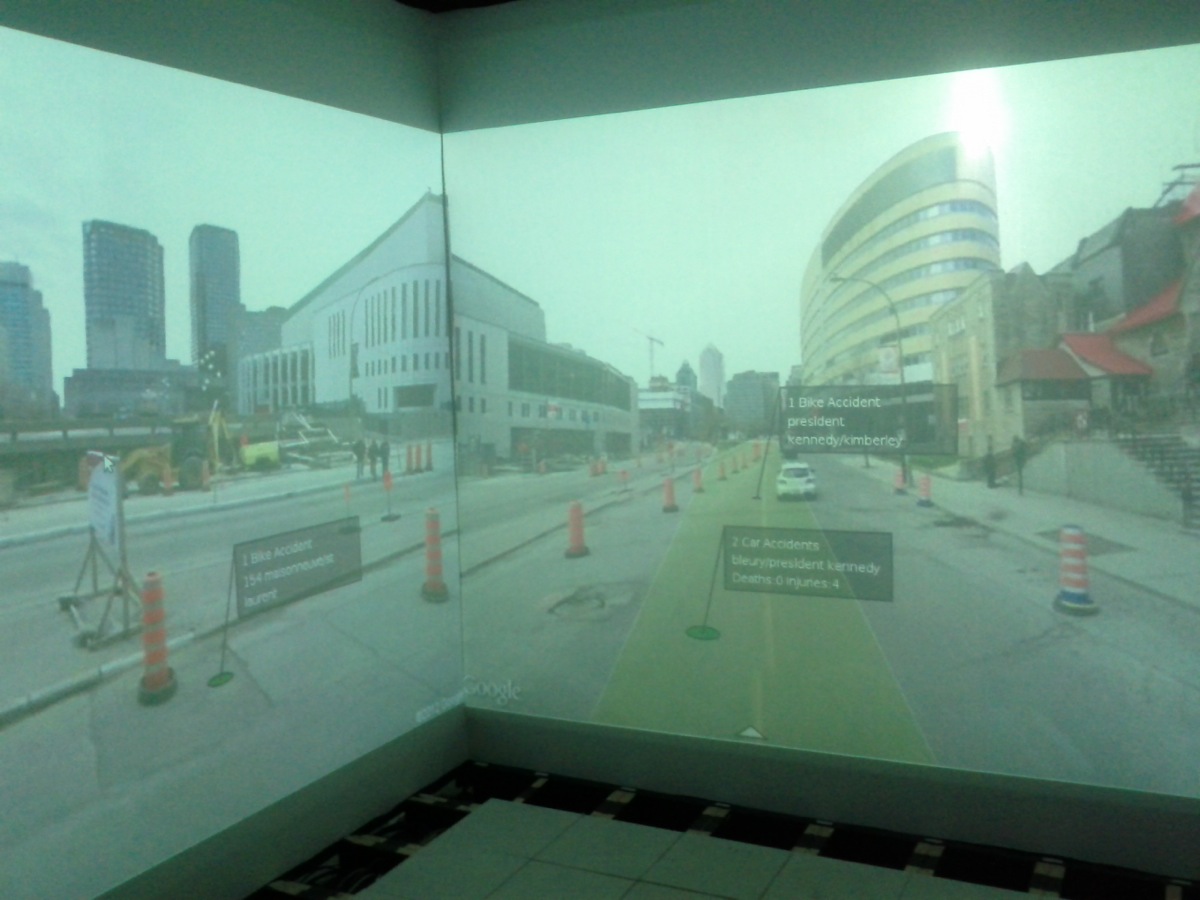

My lab's objectives in this domain are to simulate a high-fidelity representation of remote or synthetic environments, conveying the sights, sounds, and sense of touch in a highly convincing manner, allowing users, for example, to collaborate with each other as if physically sharing the same space. Achieving this goal presents challenges along the entire signal path, including sensory acquisition, signal processing, data transmission, display technologies, and an understanding of the role of multimodality in perception. This talk surveys some of our research in these areas and demonstrates several applications arising from this work, including support of environmental awareness for the blind community, remote medical training, multimodal synthesis of ground surfaces, and low-latency cross-continental distributed jazz jams. Several videos will be presented, illustrating early successes, as well as some of the interesting behavioral results we have observed in a number of application domains.

Why data plans have value beyond text and surfing

When we think of smartphones, we picture getting our email at all hours, Twitter updates, and Plants vs. Zombies. But by using the sensor, display, and wireless capabilities found in current devices, we can change people's lives. This talk presents example applications we're developing around mobile technology: helping rehabilitation patients walk safely, letting blind users discover what's around them while walking down the street, helping emergency response personnel gain an understanding of a crisis situation, and even curing amblyopia without the stigma of traditional eye-patch therapy. At the same time, exciting research challenges remain, including compensating for the limited reliability of smartphone sensors and leveraging the increasing power of massive networking to go far beyond where we are today.

What's around me? Audio augmented reality for blind users with a smartphone

Autour is an eyes-free mobile system designed to give blind users a better sense of their surroundings. The app allows users to explore an urban area without necessarily having a particular destination in mind, and to gain a rich impression of their environment through a spatial audio description of the scene. Once users notice a point of interest, additional details are available on demand. In this manner, our goal is to use sound to reveal the kind of information that visual cues such as neon signs provide to sighted users. Autour is distinguished both from other assistive navigation apps, which emphasize turn-by-turn directions, and from purely video-based systems, which require users to point the camera at objects of interest to learn more about them. Instead, Autour emphasizes the use of pre-existing, worldwide point-of-interest (POI) databases, together with a means of rendering a description of the environment, through commodity smartphone hardware, that is superior to naive playback of spoken text.
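To illustrate the basic geometry behind a spatial audio description of nearby POIs, here is a minimal Python sketch. It is not Autour's actual renderer. It computes the great-circle bearing from the user to a POI, converts that to an azimuth relative to the user's heading, and maps the azimuth to left/right gains with a simple equal-power stereo pan. The function names, the pan law, and the example coordinates are assumptions for illustration only. A deployed system would more plausibly use binaural (HRTF) rendering, because plain stereo panning cannot distinguish a source in front from one behind.

```python
import math


def initial_bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle initial bearing from point 1 to point 2, in degrees clockwise from true north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


def relative_azimuth_deg(bearing: float, heading: float) -> float:
    """Direction of the POI relative to the user's heading, in [-180, 180); negative means left."""
    return (bearing - heading + 540.0) % 360.0 - 180.0


def equal_power_gains(azimuth_deg: float) -> tuple[float, float]:
    """Hypothetical pan law: map azimuth to (left, right) gains with left**2 + right**2 == 1.

    sin() folds rear sources onto the front half-plane, so this stereo pan
    cannot convey front/back; that limitation is accepted in this sketch.
    """
    pan = math.sin(math.radians(azimuth_deg))  # -1 = hard left, +1 = hard right
    angle = (pan + 1.0) * math.pi / 4.0        # 0 .. pi/2
    return math.cos(angle), math.sin(angle)


if __name__ == "__main__":
    user = (45.5048, -73.5772)  # example coordinates (hypothetical user position)
    poi = (45.5058, -73.5772)   # a point roughly 110 m due north of the user
    bearing = initial_bearing_deg(*user, *poi)
    azimuth = relative_azimuth_deg(bearing, heading=90.0)  # user facing east
    left, right = equal_power_gains(azimuth)
    print(f"bearing={bearing:.1f} deg, azimuth={azimuth:.1f} deg, L={left:.2f}, R={right:.2f}")
```

With the user facing east, a POI due north lands at an azimuth of -90 degrees and is rendered almost entirely in the left channel.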

This presentation describes some of the interface design challenges and safety concerns we faced, along with the technical aspects of our implementation that were relevant to making the system practical for wide-scale deployment. Feedback from blind users is discussed, along with the evolution of the interface in response to this feedback. Finally, we summarize our ongoing research to develop real-time "Walking Straight" anti-veering guidance for crossing at pedestrian intersections, our experimental efforts to personalize Autour's description of the environment to what is relevant to each user, and the use of computer vision to describe the environment, particularly indoors.

Evolving consumer activism in the digital world

There was a time, not so long ago, when a disappointed customer with a beef could do little more than send a letter of complaint to the company, and possibly to the Better Business Bureau, and then grumble to friends and family if subsequently ignored.

Enter the age of the Internet. Evolving from the early practices of Usenet posts and mass email in the 1990s to so-called "rogue sites", caustic blogs, and viral YouTube videos throughout the next decade, on-line consumer activism is now so firmly entrenched as a part of the Web that few companies and products have escaped on-line pillorying, easily found by anyone searching for information.

This talk presents a personal perspective from my years of on-line consumer activism. I begin with a summary of the stages of a relationship breakdown between company and consumer, identifying the missed opportunities for image- and loyalty-building along the way.

The first lesson is simple: don't ignore negative feedback or discourage complaints. This point is illustrated with a brief history of Untied.com, the website that effectively gave birth to the on-line "travel complaints industry", along with a number of anecdotes dealing with other companies.

The second lesson, more subtle but of far greater importance, is that corporate leadership (and university administrations) can benefit from such negative feedback, depending on how they respond. Criticism affords tremendous insight into consumer or employee opinion. Moreover, it often indicates that there is a constituency who hopes the company will turn its mistakes around. By becoming honest, active participants in the dialogue with the "complainers", the corporation not only demonstrates that it is worthy of a second chance, but also helps foster meaningful exchange with its most valuable assets, namely its employees and customer base.

From Rehearsal to Performance: Ensemble Learning in Open Orchestra and Distributed Rehearsal for World Opera

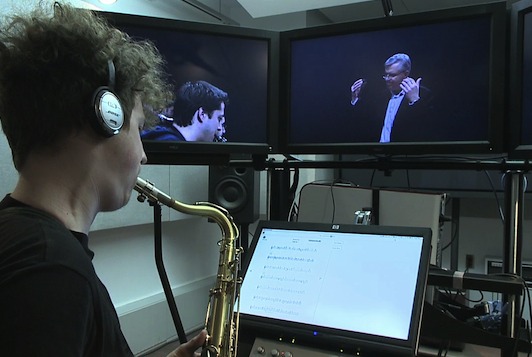

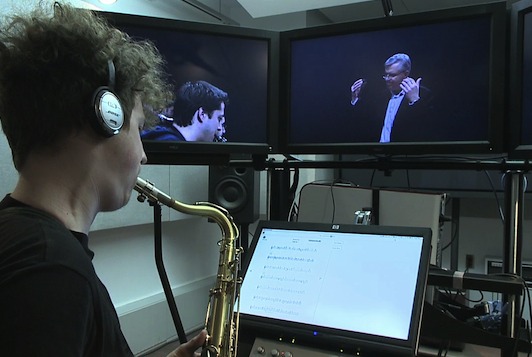

Open Orchestra serves as the musical equivalent of an aircraft simulator, providing musicians with the immersive experience of playing with an orchestra. The system combines the high-fidelity experience of ensemble rehearsal or performance with the convenience and flexibility of solo study, overcoming the limitations of time, space, and the availability of other performers. Students at multiple locations can rehearse in front of a three-screen immersive display, in which previously recorded audio and video are rendered from the perspective of the student's position in the orchestra. Open Orchestra builds on this theme to support the pedagogical objective of providing both automated (signal analysis) and human (instructor) feedback on individual recordings, as needed to improve one's performance.

In a similar vein, as a step toward its objective of delivering the highest quality of audio and video over research networks in support of a live, multi-site opera performance, the World Opera Project has been developing tools and a practice methodology to train instrumentalists and singers in the use of this new networked medium. This entails consideration of acquisition and display hardware, signal processing, and strategies not only to minimize latency but also to incorporate its effects into the resulting work.

This presentation addresses these two distributed scenarios for distance learning of music, both of which focus on supporting the demands of ensemble performance, and discusses some of the lessons learned from recent experiments intended to evaluate the pedagogical benefits of the technologies and their implications for future performance. Video demonstrations of sample learning scenarios will also be shown.

More bandwidth please! Why everyone's missing the big picture on video

McGill's Ultra-Videoconferencing effort grew out of earlier pioneering efforts in multichannel audio network transmission, accompanied by MPEG video. While the limited quality of MPEG streams detracted from the experience of remote events, the more serious limitation was that of encoding latency. This prevented effective interaction in bidirectional communication (videoconferencing) scenarios, in particular for distributed musical performance. These factors motivated our use of uncompressed video, initially analog, and later digital, for transmissions with higher quality and minimal delay.
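The latency argument can be made concrete with back-of-the-envelope arithmetic. The Python sketch below adds one-way propagation delay in optical fiber (assumed here at roughly 5 µs per km, since light in glass travels at about two-thirds of its vacuum speed) to the delay introduced by a codec that buffers some number of frames before output. The distance, frame rate, and buffered-frame counts are hypothetical values chosen for illustration, not measurements of the McGill system or of any particular MPEG encoder.

```python
# Assumed propagation delay in optical fiber: about 5 microseconds per km.
FIBER_DELAY_MS_PER_KM = 0.005


def one_way_latency_ms(distance_km: float, fps: float, codec_frames_buffered: int) -> float:
    """Propagation delay plus codec buffering delay, in milliseconds.

    Deliberately ignores capture, display, switching, and network queuing delays.
    """
    propagation_ms = distance_km * FIBER_DELAY_MS_PER_KM
    frame_period_ms = 1000.0 / fps
    return propagation_ms + codec_frames_buffered * frame_period_ms


if __name__ == "__main__":
    distance_km = 4000.0  # hypothetical cross-continental fiber path
    for label, frames in [("uncompressed (no codec buffering)", 0),
                          ("codec buffering 6 frames (hypothetical)", 6)]:
        ms = one_way_latency_ms(distance_km, fps=30.0, codec_frames_buffered=frames)
        print(f"{label}: {ms:.1f} ms one way")
```

With these assumed numbers, the codec's buffering (about 200 ms) dwarfs the roughly 20 ms spent in the fiber, which is the kind of gap that makes uncompressed transmission attractive for interactive use.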

This talk surveys the recent history of our research activities at McGill in networked audiovisual communications for cultural, corporate, clinical, and classroom use. Each of these settings imposes different demands, often unmet by currently available systems. Distributed musical performance needs extremely low latency, medical diagnosis requires attention to physical configuration, and engaging seminars and conference meetings demand life-size views of the participants. Common to all of these examples is the demand for greater bandwidth: while research networks are now generally "good enough" for many of these applications, this is in large part thanks to improved codec quality. Even so, at the upper end of the spectrum, for latency-critical applications such as distributed musical performance, teleoperation, and interactive gaming, where "every millisecond counts" in erasing the distinction between "here" and "there", even a gigabit per second may not suffice.
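To see why a gigabit per second can fall short once compression is taken out of the path, note that the raw bit rate of uncompressed video is simply width × height × samples per pixel × bits per sample × frame rate. The Python sketch below evaluates that formula for a few illustrative formats. It counts the active picture only, ignoring blanking intervals, audio, and packet overhead, and the formats listed are assumptions rather than the configurations used in the McGill work.

```python
# Average samples per pixel for common chroma-subsampling schemes
# (one luma sample per pixel plus the chroma samples shared among pixels).
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def uncompressed_bitrate_bps(width: int, height: int, fps: float,
                             bits_per_sample: int, chroma: str = "4:2:2") -> float:
    """Raw bit rate of the active picture, excluding blanking and transport overhead."""
    return width * height * SAMPLES_PER_PIXEL[chroma] * bits_per_sample * fps


if __name__ == "__main__":
    formats = [
        ("1080p30, 4:2:0, 8-bit", 1920, 1080, 30, 8, "4:2:0"),
        ("1080p60, 4:2:2, 10-bit", 1920, 1080, 60, 10, "4:2:2"),
        ("2160p60, 4:2:2, 10-bit", 3840, 2160, 60, 10, "4:2:2"),
    ]
    for label, w, h, fps, bits, chroma in formats:
        gbps = uncompressed_bitrate_bps(w, h, fps, bits, chroma) / 1e9
        verdict = "fits in" if gbps <= 1.0 else "exceeds"
        print(f"{label}: {gbps:.2f} Gbit/s ({verdict} 1 Gbit/s)")
```

Under these assumptions, 1080p60 10-bit 4:2:2 video works out to about 2.49 Gbit/s and the 2160p60 case to about 9.95 Gbit/s, so only the heavily subsampled 8-bit, 30 fps stream (about 0.75 Gbit/s) fits within a gigabit.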
